This is the second of a four-part writeup based on the talk "Five Years without QA: What I learned, What I missed, and What I wish I could change for a better software quality culture" that I presented at SOFTCON 2022: Metaverse Continuum held online on October 26, 2022.

Part 1 of this series can be found here.

I have an unpopular opinion, at least for Philippine software shops: I think that QA testing doesn't fit in with Agile methodologies. Handing off development work to a separate QA tester for scrutiny harkens back to the Waterfall model of software development, where verification was a separate activity from implementation.

The view that verification has to be done by a third party, a QA tester or analyst, as an activity separate from software implementation is so pervasive that someone asked about it during the SOFTCON talk. I had expected adverse reactions to the topic, and this question sums them up:

Can devs wear the QA hat without biases to their work or other devs work? Did the number of bugs get reduced without QA and did the delivery time get reduced without QA? I ask as almost all industries have QA.

This was a very loaded question, and to best answer it I have to break it down into three parts, namely:

- The belief that "QA testers are needed to perform an unbiased verification of quality in the work of developers"

- The impact of not having QA on bug count and delivery time

- All industries have dedicated QA analysts, hence software development has to have QA analysts

Let's address these questions one at a time.

"QA testers are needed to perform an unbiased verification of quality in the work of developers"

This comes from the very first part, asking: "can devs wear the QA hat without biases to their work or other devs work?" It paints software developers as biased and therefore lacking the credibility to objectively verify their own work or the work of others, hence the need for an independent third party in the form of a QA tester.

Without being antagonistic against QA testers, a counter-question to this line would be "Why would you hire developers you don't trust?"

While it is true that software developers aren't immune to overlooking specifications or missing details – they are human after all – this cross-check can be done by other developers, especially if everyday work in an organization requires them to do so. Peer reviews, verification on beta or staging environments, and automated tests are all mechanisms by which we validate our work.

Beyond these, the Product Trio (Product Manager, Technical Lead, and Experience Designers) should ultimately be accountable for two things. First, they need to verify that the output aligns with the product and technical designs that they themselves defined in the user stories that developers implement. Second, they have to re-check if the solution solves the problem that prompted its implementation in the first place.

It's very important that the Product Trio validate how developers understood the requirements, as reflected in the final product. While the ideal scenario is to achieve alignment at the beginning of the development effort, some misunderstandings and erroneous assumptions only really reveal themselves with the final implementation of the code. It's equally important for the Product Trio to validate that the software in its final form does solve the problem.

Perhaps that's one thing QA could actually lend a hand with.

The impact of not having QA on bug count and delivery time

Working without QA will be slower at first, but it becomes faster over time.

For a team that is shifting away from manual testing and moving into writing automated tests, implementing peer reviews, and so on, the process change and, more importantly, the cultural change will not be easy. It will be hard at first, but as the team gains proficiency in the practices, they will end up going faster. If the software developers are already proficient in automated test practices and peer review processes before joining the organization, or if the organization itself has had these practices in place for a long time, the overhead of writing unit tests and doing peer reviews will be negligible.

In fact, I would argue that teams will move faster – way faster – than when they did have QA. That's because the delays caused by the handover of user stories from software developer to QA tester will be absent. This circles back to the Power of Small Batches I talked about in the previous article.

The speed of delivery, in turn, contributes to the tightening of feedback loops as the product gets to Product Managers (aka product owners) and, ultimately, to end users much faster. Tight feedback loops, coupled with the review processes in place during development, mean that really severe bugs get surfaced earlier in the process. That ultimately leads to fewer bugs, not more. It may not eliminate all of the bugs, but any organization that claims to have released zero-bug software would be exaggerating, to say the least.

All industries have dedicated QA analysts, so software development has to have QA analysts

Finally, there's the assumption that because other industries have QA analysts, software developers have to have them too. That's actually true – there is value that QA professionals can provide to software organizations. It's just that it isn't in the way that they are currently being used, at least not in the Philippine software industry context that I'm familiar with.

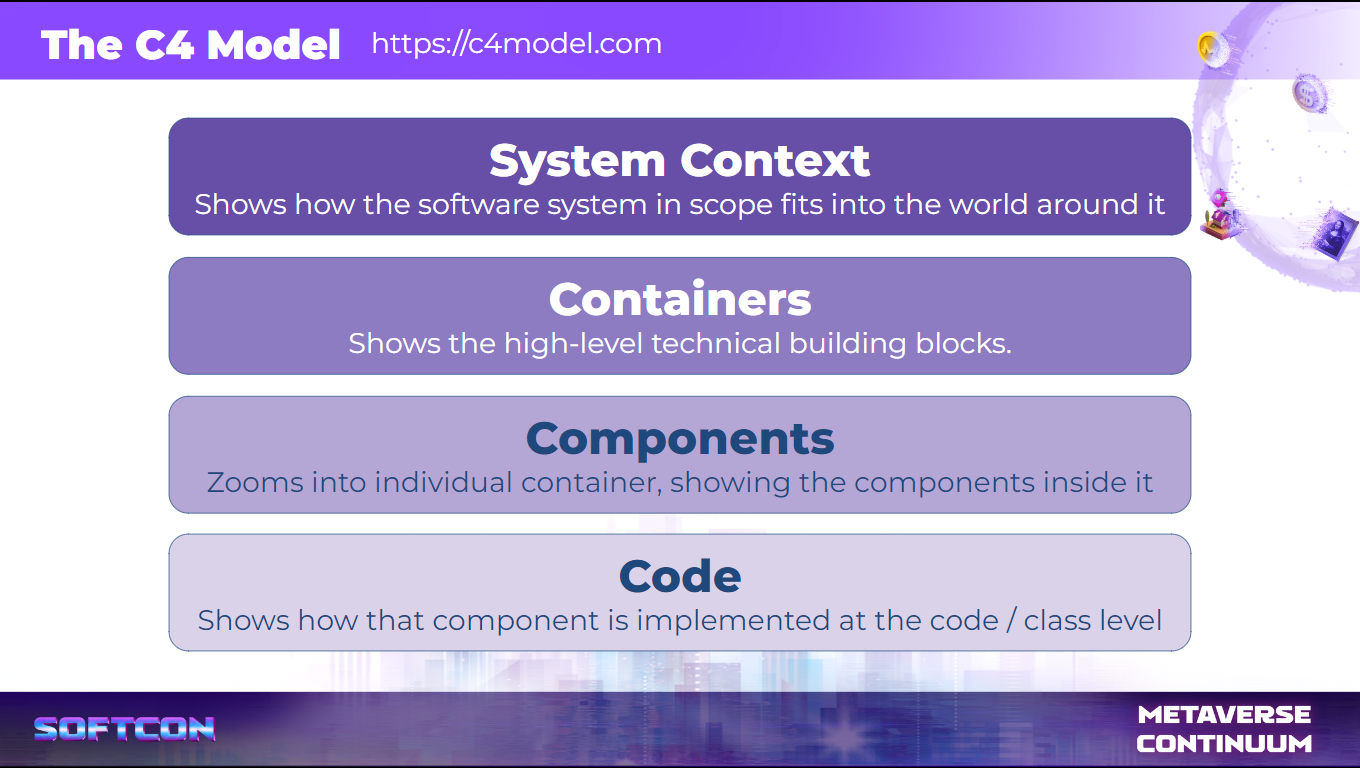

The C4 model for visualising software architecture describes software at four levels: System Context, Containers, Components, and Code. In a manner of speaking, these levels correspond to the distance each one has from physical concepts. System Context, for example, describes how humans interact with software systems, as well as interactions with external software systems. The Code level, on the other end, describes the program code compiled or interpreted as computer instructions.

By the very nature of their jobs, software developers would be most familiar with the Code perspective of a software system, while QA testers or analysts would be more familiar with the System Context level. This – dare I say – polar separation presents a unique opportunity for collaboration: QA professionals can balance software developers' code-centric context with a cross-functional system-centric context that is closer to clients.

QA testers can likewise give more balance to testing techniques, as developers test with a white box perspective, while QA testers will come with a black box perspective. Organizations can opt to have their QA attend more to integration testing than user-story testing. Finally, more experienced QA professionals will have insights on security, regression testing, compliance with laws and regulations, usability, user experience in terms of business workflow, and even performance of the product.

Software testing is not assembly line QA

A final note that I'd like to put in regarding QA in other industries is that most of the time the parallels we draw for QA are taken from assembly line manufacturing. This is similar to the way software developers may have taken practices such as Kanban from manufacturing. Unfortunately, this paradigm of assembly line testing is utterly inappropriate for the kind of quality assurance work involved in software engineering.

Assembly line quality assurance usually involves random sampling of batches: when a distinct production run or batch of products is done, a QA inspector will take a random sample of that product and run tests against that sample to check if production standards are met. In other manufacturing processes, a simple-enough test is applied to every item that has come out of that production run. This is the most common illustration of what quality assurance work looks like in manufacturing.

Software engineering is fundamentally different from manufacturing because every software release is different from the one before it. Every release has the potential to change the way the software and its workflows behave in subtle ways. In that sense, unlike in manufacturing, where a batch has defined attributes that describe what a quality product is, software applications simply do not have that baseline.

Sure, a QA tester might be holding on to a specification – but only end users have the final say on whether a specification satisfactorily meets the real-world demands on a software solution. In that respect, software development is more akin to pottery: no two pots are the same, they come out differently every single release, and while there are well-understood parameters that define a pot's quality, like smoothness, hardness, and resistance to chipping and cracking, how a pot responds to the stresses of cooking and constant everyday use keeps evolving.

People should accept that, ultimately, a piece of software may meet all known specifications, but a spec is not the whole picture of quality. A software specification is merely a hypothesis about how a real-life problem is solved. Only when software gets into the hands of its end users will one know whether the solution crafted by a team actually creates the value that business analysts or product managers predicted it would bring.

Others would argue that QA testers are still needed to verify the spec. This is where automated testing tools ought to be used heavily – and if it turns out that writing automated tests bogs down developers, the organization has the option to bring in Quality Engineers (QE) focused on those tasks.
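To make "verifying the spec with automated tests" a bit more concrete, here's a minimal sketch in Python using the standard `unittest` module. Everything in it is made up for illustration: the acceptance criterion ("orders of 1,000.00 or more get a 10% discount"), the `order_total` function, and the constants are hypothetical and don't come from any real project. The point is only that each line of a spec can become a check that runs on every change, with no handover involved.

```python
import unittest
from decimal import Decimal

# Hypothetical acceptance criterion from a user story (an assumption for
# this sketch): "Orders with a subtotal of 1,000.00 or more get 10% off."
DISCOUNT_THRESHOLD = Decimal("1000.00")
DISCOUNT_RATE = Decimal("0.10")


def order_total(subtotal: Decimal) -> Decimal:
    """Return the amount to charge for an order, rounded to two decimals."""
    if subtotal < 0:
        raise ValueError("subtotal cannot be negative")
    if subtotal >= DISCOUNT_THRESHOLD:
        subtotal = subtotal * (1 - DISCOUNT_RATE)
    return subtotal.quantize(Decimal("0.01"))


class OrderTotalSpecTest(unittest.TestCase):
    """Each test mirrors one statement in the (hypothetical) spec."""

    def test_no_discount_below_threshold(self):
        self.assertEqual(order_total(Decimal("999.99")), Decimal("999.99"))

    def test_discount_applies_at_threshold(self):
        self.assertEqual(order_total(Decimal("1000.00")), Decimal("900.00"))

    def test_negative_subtotal_is_rejected(self):
        with self.assertRaises(ValueError):
            order_total(Decimal("-1.00"))
```

Saved as a module, this can be run with `python -m unittest`. Tests like these only confirm that the code matches the spec as written; whether the spec itself solves the real problem is still a question for end users.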

But does this mean that we should get rid of manual QA testers, especially if they're still part of your organization? My answer is definitely not.

Post-development Quality Assurance

In my previous article I talked about post-QA software development, and there's a corresponding post-developer QA aspect to the discussion, going back to the systems context perspective that I talked about above.

Having QA shift their focus away from day-to-day software development and work with the Product Trio instead might, in the end, be more productive and meaningful. This allows QA professionals to work not just on ensuring alignment with the specification but also on usability, accessibility and inclusivity, security, compliance, and performance. Knowledge of these areas, especially when coupled with Quality Engineering skills, would expand their horizons and let them cover a more holistic picture of quality in software development.

In the next article I'll address the elephant in the room: the adversarial relationship between software developers and quality assurance testers, and how to improve software quality culture.